Spearman’s Theory of Intelligence, proposed by psychologist Charles Spearman in the early 20th century, is one of the most influential and controversial theories in intelligence research. It attempts to explain what intelligence is and how it can be measured. Its central claim is that a single general mental ability underlies performance on all cognitive tasks, with narrower abilities specific to individual tasks playing a secondary role. This introduction examines the concept behind Spearman’s theory, its key components, and its implications for understanding human intelligence.

The g factor, where g stands for general intelligence, is a statistic used in psychometrics in an attempt to quantify the mental ability underlying results of various tests of cognitive ability. The existence of such an underlying g factor was postulated in 1904 by Charles Spearman.

Spearman, an early psychometrician, found that schoolchildren’s grades across seemingly unrelated subjects were positively correlated, and proposed that these correlations reflected the influence of a dominant factor, which he termed g for “general” intelligence or ability. He developed a model in which all variation in intelligence test scores is explained by two kinds of factor: a general factor g that governs performance on all cognitive tasks, and, for each task, a specific factor s reflecting the particular abilities that make a person more skilled at that task.
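The two-factor model can be made concrete. A minimal sketch, assuming standardized scores z_j = l_j·g + s_j, where l_j is test j’s loading on g and the specific factors s_j are uncorrelated with g and with one another: under those assumptions two tests correlate only through g, so r_jk = l_j·l_k, and each test’s variance splits into a g part l_j² and a specific part 1 − l_j², matching the purple and blue areas in the illustration in the next section. The loadings are hypothetical. The sketch also computes Spearman’s tetrad difference, which is zero for every quartet of tests when a single factor generates the correlations.

```python
"""Correlations implied by Spearman's two-factor model (illustrative sketch).

Assumes standardized scores z_j = l_j * g + s_j, with g and every s_j mutually
uncorrelated and Var(z_j) = 1. The loadings used below are hypothetical.
"""


def implied_correlations(loadings):
    """Correlation matrix implied by one general factor: r_jk = l_j * l_k."""
    n = len(loadings)
    return [
        [1.0 if j == k else loadings[j] * loadings[k] for k in range(n)]
        for j in range(n)
    ]


def variance_split(loading):
    """Split a standardized test's variance into its g share and its s share."""
    g_share = loading ** 2
    return g_share, 1.0 - g_share


def tetrad_difference(r, a, b, c, d):
    """Spearman's tetrad difference r_ab*r_cd - r_ac*r_bd (zero under one factor)."""
    return r[a][b] * r[c][d] - r[a][c] * r[b][d]


loadings = [0.8, 0.7, 0.6, 0.5]  # hypothetical g-loadings of four tests
r = implied_correlations(loadings)
for row in r:
    print(" ".join(f"{x:.2f}" for x in row))

g_part, s_part = variance_split(0.8)
print(f"test 0: {g_part:.2f} of variance from g, {s_part:.2f} specific")
print(f"tetrad difference: {tetrad_difference(r, 0, 1, 2, 3):.2e}")
```

Real test batteries rarely satisfy the tetrad condition exactly, which is one reason later models added group factors between g and the individual tests.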

There is some debate about whether g represents a real entity or is merely a kind of statistical average of test results. The accumulation of cognitive testing data and improvements in analytical techniques have preserved g’s central role while refining how it is conceived. According to the American Psychological Association, a hierarchy of factors with g at its apex and group factors at successively lower levels is now the most widely accepted model of cognitive ability. Other models have also been proposed, and significant controversy surrounds g and its alternatives.

Mental testing and g

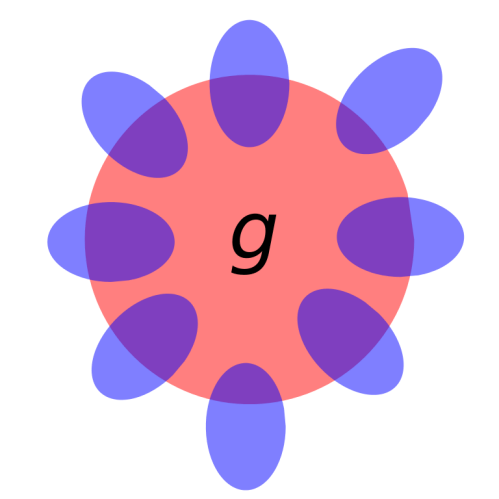

An illustration of Spearman’s two-factor intelligence theory. Each small oval is a hypothetical mental test. The blue areas show the variance attributed to s, and the purple areas the variance attributed to g.

There are many different kinds of IQ tests using a wide variety of methods. Some tests are visual, some are verbal, some use only abstract-reasoning problems, and some concentrate on arithmetic, spatial imagery, reading, vocabulary, memory or general knowledge. In 1904 the psychologist Charles Spearman made the first formal factor analysis of correlations between mental tests. He found that a single common factor accounted for the positive correlations among the tests, a conclusion still accepted in principle by many psychometricians. Spearman named it g, for “general intelligence factor”. In any collection of IQ tests, the test that best measures g is, by definition, the one with the highest correlations with all the others. Most such highly g-loaded tests involve some form of abstract reasoning. Partly for this reason, Spearman and others have regarded g as the real, perhaps genetically determined, essence of intelligence. This is still a common but unproven view. Other factor analyses of the same data, yielding different results, are possible, and some psychometricians regard g as a statistical artifact. Raven’s Progressive Matrices, a test of visual reasoning, is widely regarded as the best single measure of g.
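The claim that the best measure of g is the test most correlated with the others can be illustrated by extracting a single factor from a correlation matrix. The sketch below uses iterated principal-axis factoring, one common extraction method chosen here for simplicity; it is not presented as Spearman’s own 1904 procedure. The four-test correlation matrix is hypothetical, constructed so that its true loadings are .8, .7, .6 and .5.

```python
"""Estimating g-loadings with one-factor principal-axis factoring (sketch).

Procedure: put communality estimates on the diagonal of the correlation
matrix, take the dominant eigenvector by power iteration, rescale it into
loadings, update the communalities, and repeat.
"""
import math


def dominant_eigen(m, iters=200):
    """Largest eigenvalue and unit eigenvector of a symmetric matrix with
    positive entries, found by power iteration."""
    n = len(m)
    v = [1.0] * n
    for _ in range(iters):
        w = [sum(m[i][j] * v[j] for j in range(n)) for i in range(n)]
        norm = math.sqrt(sum(x * x for x in w))
        v = [x / norm for x in w]
    # Rayleigh quotient v^T M v gives the eigenvalue for the converged v.
    lam = sum(v[i] * sum(m[i][j] * v[j] for j in range(n)) for i in range(n))
    return lam, v


def first_factor_loadings(r, iters=200):
    """One-factor loadings by iterated principal-axis factoring."""
    n = len(r)
    # Starting communalities: each test's largest correlation with another test.
    h2 = [max(abs(r[i][j]) for j in range(n) if j != i) for i in range(n)]
    loadings = [0.0] * n
    for _ in range(iters):
        # Replace the 1s on the diagonal with the current communality estimates.
        reduced = [[h2[i] if i == j else r[i][j] for j in range(n)] for i in range(n)]
        lam, v = dominant_eigen(reduced)
        loadings = [math.sqrt(lam) * x for x in v]
        h2 = [x * x for x in loadings]
    return loadings


# Hypothetical correlations among four tests (generated from loadings .8/.7/.6/.5).
R = [
    [1.00, 0.56, 0.48, 0.40],
    [0.56, 1.00, 0.42, 0.35],
    [0.48, 0.42, 1.00, 0.30],
    [0.40, 0.35, 0.30, 1.00],
]
est = first_factor_loadings(R)
best = max(range(len(est)), key=lambda i: est[i])
print("estimated g-loadings:", [round(x, 3) for x in est])
print("best single measure of g: test", best)
```

With this idealized matrix the estimates should converge to the constructed loadings; real correlation matrices contain sampling error and group factors, so a single factor fits them only approximately.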

The relationship of g to intelligence tests may be more readily understood with an analogy. Irregular objects, such as the human body, are said to vary in “size”. Yet no single measurement of a human body is obviously preferred to measure its “size”. Instead, many and various measurements, such as those taken by a tailor, may be made. All of these measurements will be positively correlated with each other, and if one were to “add up” or combine all of the measurements, the aggregate would arguably give a better description of an individual’s size than any single measurement. Factor analysis provides a principled way to form such a combination.

The process is intuitively similar to taking the average of a sample of measurements of a single variable, but instead “size” is a summary measure of a sample of variables. g is similar, in that it is abstracted from various measures of cognitive ability. Of course, variation in “size” does not fully account for all variation in the measurements of a human body. Factor analysis techniques are not limited to producing single factors, and an analysis of human bodies might produce (for example) two major factors, such as height and girth. However, factor analyses of cognitive test scores do in fact yield a dominant first factor, g.
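The analogy’s key step, that an aggregate of correlated measurements tracks the underlying quantity better than any single one, can be checked by simulation. In this sketch a hypothetical latent “size” drives five noisy measurements, each with an expected correlation of 0.6 with it; the loading, sample size and random seed are arbitrary assumptions. Under these assumptions the sum of the five should correlate about 0.86 with the latent variable (3/√12.2).

```python
"""An aggregate tracks a latent trait better than any single measure (sketch).

Hypothetical setup: latent "size" ~ N(0, 1); each of K measurements equals
LOADING * size plus independent noise, scaled to unit variance.
"""
import math
import random
import statistics


def pearson(xs, ys):
    """Pearson correlation of two equal-length sequences."""
    mx, my = statistics.fmean(xs), statistics.fmean(ys)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)


rng = random.Random(42)
N, K, LOADING = 5000, 5, 0.6
noise_sd = math.sqrt(1 - LOADING ** 2)  # keeps each measurement at unit variance

latent = [rng.gauss(0, 1) for _ in range(N)]
measures = [[LOADING * s + rng.gauss(0, noise_sd) for s in latent] for _ in range(K)]
composite = [sum(m[i] for m in measures) for i in range(N)]

single_rs = [pearson(m, latent) for m in measures]
composite_r = pearson(composite, latent)
print("single measures:", [round(r, 2) for r in single_rs])
print("composite:", round(composite_r, 2))
```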

The correlation between a test and g is referred to as the test’s g-loading. Its square gives the proportion of the test’s score variance that can be attributed to variation in g. Creators of IQ tests, whose goals are generally to create highly reliable and valid tests, have therefore tried to make their tests as g-loaded as possible. Historically, this has meant damping the influence of group factors (factors influencing particular groups of tests) by testing as wide a range of mental tasks as possible. Nevertheless, tests such as Raven’s Progressive Matrices are considered among the most g-loaded in existence, even though Raven’s is quite homogeneous in the types of tasks it comprises. However, Raven’s shows substantial gender differences that are not found when g is computed from a broad collection of tests.

Mental chronometry and g

Elementary cognitive tasks (ECTs) also correlate strongly with g. ECTs are, as the name suggests, simple tasks that apparently require very little intelligence but still correlate strongly with more exhaustive intelligence tests. Determining whether a light is red or blue, and determining whether four or five squares are drawn on a computer screen, are two examples of ECTs. Answers are usually given by quickly pressing buttons. Often, in addition to the buttons for the two options, a third “home” button is held down from the start of each trial. When the stimulus appears, the subject lifts their hand from the home button and moves it to the button for the correct answer. This lets the examiner separate the time spent deciding on the answer (reaction time, usually measured in fractions of a second) from the time spent physically moving the hand to the correct button (movement time). Reaction time correlates strongly with g, while movement time correlates less strongly. ECT testing has allowed quantitative examination of hypotheses concerning test bias, subject motivation, and group differences. By virtue of their simplicity, ECTs provide a link between classical IQ testing and biological inquiries such as fMRI studies.
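Separating decision time from movement time comes down to subtracting timestamps. A minimal sketch, assuming each trial records the stimulus onset, the moment the home button is released, and the moment the answer button is pressed; the field names and the three trials are made up for illustration, not taken from any particular apparatus.

```python
"""Splitting an ECT response into reaction time and movement time (sketch).

Assumes each trial logs three timestamps in milliseconds. Field names and
trial values are hypothetical.
"""
from dataclasses import dataclass
from statistics import median


@dataclass
class Trial:
    stimulus_onset_ms: float  # light or squares appear
    home_release_ms: float    # finger leaves the home (start) button
    answer_press_ms: float    # finger presses the chosen response button

    @property
    def reaction_time(self) -> float:
        """Decision phase: stimulus onset until the home button is released."""
        return self.home_release_ms - self.stimulus_onset_ms

    @property
    def movement_time(self) -> float:
        """Motor phase: leaving the home button until the answer is pressed."""
        return self.answer_press_ms - self.home_release_ms


trials = [
    Trial(0.0, 310.0, 420.0),
    Trial(0.0, 295.0, 400.0),
    Trial(0.0, 340.0, 455.0),
]
print("median RT (ms):", median(t.reaction_time for t in trials))
print("median MT (ms):", median(t.movement_time for t in trials))
```

The median is used here only because single slow trials are common in timing data; a study could equally summarize with means or trial-to-trial variability.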

Ability differentiation hypothesis

A number of researchers have suggested that the proportion of variation accounted for by g may not be uniform across all individuals within a population. Spearman’s law of diminishing returns (SLDR), also termed the ability differentiation hypothesis, predicts that the positive correlations among different cognitive abilities are weaker among more intelligent subgroups of individuals. More specifically, SLDR predicts that the g factor accounts for a smaller proportion of individual differences in cognitive test scores at higher levels of g.

SLDR was originally proposed by Charles Spearman, who reported that the average correlation between 12 cognitive ability tests was .466 in 78 normal children and .782 in 22 “defective” children. Detterman and Daniel rediscovered this phenomenon in 1989. They reported that, for subtests of both the WAIS and the WISC, subtest intercorrelations decreased monotonically with ability group, from an average intercorrelation of approximately .7 among individuals with IQs below 78 to approximately .4 among individuals with IQs above 122.

SLDR has been replicated in a variety of child and adult samples measured with broad arrays of cognitive tests. The most common approach has been to divide individuals into several ability groups using an observable proxy for their general intellectual ability, and then either to compare the average intercorrelation among the subtests across groups, or to compare the proportion of variation accounted for by a single common factor in each group. However, as both Deary et al. (1996) and Tucker-Drob (2009) have pointed out, dividing the continuous distribution of intelligence into an arbitrary number of discrete ability groups is less than ideal for examining SLDR. Tucker-Drob (2009) extensively reviewed the literature on SLDR and the various methods by which it had previously been tested, and proposed that SLDR is most appropriately captured by fitting a common factor model that allows the relations between the factor and its indicators to be nonlinear. He applied such a model to nationally representative data on children and adults in the United States and found consistent evidence for SLDR. For example, Tucker-Drob (2009) found that a general factor accounted for approximately 75% of the variation in seven different cognitive abilities among very low-IQ adults, but only approximately 30% of the variation among very high-IQ adults.
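The group-comparison approach, and the pattern SLDR predicts, can be mimicked with a toy simulation. The assumption here is that each test equals g plus specific noise whose spread grows with g, so g accounts for less variance at high ability; this heteroscedastic setup is a simple stand-in, not Tucker-Drob’s nonlinear factor model. For simplicity the sample is split at the simulated g itself, whereas real studies must split on an observed proxy, and a median split is exactly the kind of arbitrary discretization criticized above.

```python
"""Ability differentiation in a toy simulation (illustrative sketch).

Assumption: test score = g + noise, with noise SD = 0.6 * exp(0.5 * g), so the
specific (non-g) variance rises with ability. All constants are arbitrary.
"""
import math
import random
import statistics
from itertools import combinations


def pearson(xs, ys):
    """Pearson correlation of two equal-length sequences."""
    mx, my = statistics.fmean(xs), statistics.fmean(ys)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)


def mean_intercorrelation(rows):
    """Average correlation over all pairs of tests (columns of `rows`)."""
    cols = list(zip(*rows))
    return statistics.fmean(pearson(a, b) for a, b in combinations(cols, 2))


rng = random.Random(7)
N_PEOPLE, N_TESTS = 4000, 6
people = []
for _ in range(N_PEOPLE):
    g = rng.gauss(0, 1)
    noise_sd = 0.6 * math.exp(0.5 * g)  # specific variance grows with g (assumed)
    people.append((g, [g + rng.gauss(0, noise_sd) for _ in range(N_TESTS)]))

low = [scores for g, scores in people if g < 0]
high = [scores for g, scores in people if g >= 0]
low_r, high_r = mean_intercorrelation(low), mean_intercorrelation(high)
print("mean intercorrelation, lower half:", round(low_r, 2))
print("mean intercorrelation, upper half:", round(high_r, 2))
```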

Biological and genetic correlates of g

The g-loading of a test correlates with its heritability, which Jensen has taken as support for a genetic g as opposed to g being a statistical artifact. A test’s heritability can be estimated, for example, by examining how much inbreeding depression from cousin marriages affects scores on it, or how much siblings differ from one another on it.
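Jensen’s argument relies on what is usually called the method of correlated vectors: across subtests, the vector of g-loadings is correlated with the vector of heritabilities. A minimal sketch follows; the subtest names and every number in it are invented placeholders, not results from any study.

```python
"""Method of correlated vectors (illustrative sketch with invented numbers)."""
import math
import statistics


def pearson(xs, ys):
    """Pearson correlation of two equal-length sequences."""
    mx, my = statistics.fmean(xs), statistics.fmean(ys)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)


# Hypothetical subtests: (name, g-loading, heritability estimate).
subtests = [
    ("vocabulary", 0.80, 0.60),
    ("matrices", 0.75, 0.55),
    ("arithmetic", 0.65, 0.50),
    ("digit span", 0.50, 0.40),
    ("coding", 0.40, 0.35),
]
g_loadings = [s[1] for s in subtests]
heritabilities = [s[2] for s in subtests]
r = pearson(g_loadings, heritabilities)
print(f"correlation between the two vectors: {r:.2f}")
```

With only a handful of subtests, a correlation between two such vectors rests on very few data points, so a high value by itself is weak evidence.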

g has a large number of biological correlates. Strong correlates include the mass of the prefrontal lobe, overall brain mass, the rate of glucose metabolism within the brain, and cortical thickness. g correlates less strongly, but significantly, with overall body size. Evidence regarding the correlation between g and peripheral nerve conduction velocity is conflicting: some reports find significant positive correlations, others no correlation or even negative ones. Some research has found that g completely mediates the relation between IQ and cortical thickness. Current research suggests that the heritability of g is approximately 0.85, even higher than that of IQ itself, so most of the heritability of test performance is attributable to g.

Brain size has long been known to be correlated with g. Recently, an MRI study of twins showed that frontal gray matter volume was highly significantly correlated with g and highly heritable. A related study reported that the correlation between brain size (itself reported to have a heritability of 0.85) and g is 0.4, and that this correlation is mediated entirely by genetic factors. A g factor has been observed in mice as well as in humans.
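The claim that the 0.4 correlation is mediated entirely by genes can be turned into a rough genetic correlation. Assuming the standard bivariate twin decomposition with no environmental covariance, the phenotypic correlation reduces to r_p = h_x·h_y·r_g, where h is the square root of each trait’s heritability. The 0.85 heritability for brain size and the 0.4 correlation come from the text; borrowing the separate 0.85 estimate for g quoted earlier is our assumption, since the study’s own heritability figure for g is not given here.

```python
"""Back-of-envelope genetic correlation (illustrative sketch).

Assumes r_p = sqrt(h2_x) * sqrt(h2_y) * r_g, i.e. the phenotypic correlation
is entirely genetic (no environmental covariance term).
"""
import math


def genetic_correlation(r_p, h2_x, h2_y):
    """Solve r_p = sqrt(h2_x) * sqrt(h2_y) * r_g for r_g."""
    return r_p / math.sqrt(h2_x * h2_y)


# r_p = 0.4 and h2 = 0.85 for brain size are from the text; h2 = 0.85 for g
# is borrowed from a separate estimate (an assumption).
r_g = genetic_correlation(0.4, 0.85, 0.85)
print(f"implied genetic correlation: {r_g:.2f}")
```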

Lehrl and Fischer (1990) have claimed that g is limited by the channel capacity of short-term memory. In their account, mental power, or the capacity $C$ of short-term memory (measured in bits of information), is the product of the individual’s mental speed of information processing $C_k$ (in bit/s) and the duration $D$ (in s) for which information stays in short-term working memory, that is, the duration of the memory span. Hence:

$$C\ [\text{bit}] = C_k\ [\text{bit/s}] \times D\ [\text{s}]$$
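A worked instance of the formula, with hypothetical inputs chosen only to show how the units combine (bit/s × s = bit). It illustrates Lehrl and Fischer’s proposal as stated, not a validated model.

```python
"""Lehrl and Fischer's proposed capacity formula, C = C_k * D (sketch).

The input values below are hypothetical, chosen only to show the arithmetic.
"""


def stm_capacity_bits(speed_bits_per_s: float, duration_s: float) -> float:
    """Capacity C (bit) = processing speed C_k (bit/s) * span duration D (s)."""
    if speed_bits_per_s < 0 or duration_s < 0:
        raise ValueError("speed and duration must be non-negative")
    return speed_bits_per_s * duration_s


print(stm_capacity_bits(15.0, 5.0))  # 15 bit/s held for 5 s -> 75.0 bit
```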

This theory has been tested and found wanting by Roberts et al. (1992). There is much evidence that g is closely related to measures of working-memory capacity, but this capacity cannot be measured in bits of information.

However, recent studies attempting to find regions of the genome related to intelligence have had little success. One recent study used several hundred people in two groups: one with a very high IQ (average 160) and a control group with an average IQ of 102. It examined 1,842 DNA markers and put them through a five-step inspection process to eliminate false positives. By the fifth step, the study could not find a single gene related to intelligence. Critics of these studies say the failure to find a specific gene associated with intelligence reflects the complex nature of intelligence. They contend that intelligence is probably influenced by several genes. Some estimate that as much as 40% of the genome may contribute to intelligence.

A research group from Japan has recently claimed to have found evidence which supports the view that g is a highly genetically driven causal aspect of the brain:

Accordingly, our findings could furnish an argument against the typical criticisms offered by those who are opposed to the concept of g; in other words, g is an “artifact” (Simon, 1969) of the statistical methods that psychologists apply to the data. Gould (1981) argued that g, as a factor extracted from the factor analysis, is neither a “thing with physical reality” nor a “causal entity”, but is a “mathematical abstraction”, maintaining that “we cannot reify g as a ‘thing’ unless we have convincing, independent information beyond the fact of correlation itself.” Although the present study also draws information from correlations, we were able to depict the structure of human intelligence beyond the fact of phenotypic and genetic correlations with an explicit comparison between the independent pathway and the common pathway model; and as a “causal entity”, as a highly genetically driven entity.

Social correlates of g

Most measures of g positively correlate with conventional measures of success (income, academic achievement, job performance, career prestige) and negatively correlate with what are generally seen as undesirable life outcomes (school dropout, unplanned childbearing, poverty). Many studies suggest that specific cognitive abilities tapped by IQ tests do not predict job performance better than g alone. Research on differences in g between ethnic groups (see race and intelligence) has often sparked public controversy.

The Flynn effect and g

The Flynn effect describes a rise in IQ scores over time. There is no strong consensus as to whether rising IQ scores also reflect increases in g. In addition, there is recent evidence that the tendency for intelligence scores to rise has ended in some first world countries. Statistical analyses of IQ subtest scores suggest a g-independent input to the Flynn effect.

g and more narrow abilities

Spearman argued that, alongside a single global ability called g, there are also smaller, specific factors or abilities for particular areas, labeled s. The Cattell-Horn-Carroll theory, which has greatly influenced many current IQ tests, places g at the top of a hierarchy of mental abilities.
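In a hierarchical model of this kind, a test relates to g only through the group factor it belongs to. Assuming a simple higher-order structure with standardized factors, a test’s implied correlation with g is the product of the loadings along its path. The group names, tests and loadings below are hypothetical placeholders, not the actual Cattell-Horn-Carroll taxonomy.

```python
"""Indirect g-loadings in a higher-order (hierarchical) model (sketch).

Assumes test = a * group_factor + unique part and group_factor = b * g + residual,
all standardized, so corr(test, g) = a * b. Names and loadings are hypothetical.
"""

# Broad group factors and their loadings on g (hypothetical).
GROUP_ON_G = {"verbal": 0.85, "spatial": 0.75, "memory": 0.60}

# Each test: (group factor, loading of the test on that group factor); hypothetical.
TESTS = {
    "vocabulary": ("verbal", 0.80),
    "similarities": ("verbal", 0.70),
    "block design": ("spatial", 0.75),
    "digit span": ("memory", 0.65),
}


def indirect_g_loading(test: str) -> float:
    """Product of the loadings on the path test <- group factor <- g."""
    group, test_on_group = TESTS[test]
    return test_on_group * GROUP_ON_G[group]


for name in TESTS:
    print(f"{name:>13}: {indirect_g_loading(name):.2f}")
```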

Challenges to g

In 1981, paleontologist and biologist Stephen Jay Gould voiced his objections to the concept of g, as well as intelligence testing in general, in his The Mismeasure of Man. Peter Schönemann has also argued for the non-existence of g.

Several researchers have argued that even if g were replaced by a model with several intelligences, the situation would change less than some might expect. All tests of cognitive ability would still be highly correlated with one another, and groups would still differ on cognitive tests. Such a change might, however, bear on some arguments about whether those group differences are genetic, such as Spearman’s hypothesis.

Bringsjord (2000) characterized the science of mental ability as a form of “computationalism” that is “either silly or pointless,” and argued that “Mental ability tests measure differences in tasks that will soon be performed for all of us by computational agents.”

Howard Gardner contends that the rare condition of savant syndrome argues against a single generalized intelligence. People with savant syndrome may have general IQs in the range associated with intellectual disability yet possess certain mental abilities that are remarkable compared with those of the average person. These abilities include superior memory, extremely fast arithmetic calculation, advanced musical ability, rapid language learning and exceptional artistic ability. However, g does not rule out the existence of narrower abilities, and a number of theories explaining savant syndrome are compatible with g.

An alternative interpretation was recently advanced by van der Maas and colleagues in 2006. Their mutualism model assumes that intelligence depends on several independent mechanisms, none of which influences performance on all cognitive tests. These mechanisms support each other so that efficient operation of one of them makes efficient operation of the others more likely, thereby creating the positive correlations between intelligence tests. Rushton and Jensen argue that evidence for a genetic g, such as correlations between g-loadings and heritability of subtests, is problematic for the mutualism theory.
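The mutualism idea, that positive correlations can emerge without any common cause, can be sketched numerically. This sketch assumes the logistic form we understand van der Maas et al. (2006) to use, dx_i/dt = a_i·x_i·(1 − x_i/K_i) + a_i·Σ_{j≠i} M_ij·x_j·x_i/K_i, with every coupling M_ij set to one value m. Each simulated person’s capacities K_i and rates a_i are drawn independently, so no common factor exists in the data; the parameter ranges, m, and the simple Euler integration are our own illustrative choices.

```python
"""A toy version of the mutualism model (illustrative sketch).

Assumed dynamics for one person's processes x_i:
    dx_i/dt = a_i*x_i*(1 - x_i/K_i) + a_i * sum_{j != i} m * x_j * x_i / K_i
K_i and a_i are independent draws (hypothetical ranges). m must stay below
1/(n_proc - 1) or the coupled processes grow without bound.
"""
import random
import statistics
from itertools import combinations


def pearson(xs, ys):
    """Pearson correlation of two equal-length sequences."""
    mx, my = statistics.fmean(xs), statistics.fmean(ys)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / (sxx * syy) ** 0.5


def simulate_person(rng, n_proc=4, m=0.15, dt=0.05, steps=1000):
    """Euler-integrate one person's processes to (near) equilibrium."""
    K = [rng.uniform(0.5, 1.5) for _ in range(n_proc)]  # independent capacities
    a = [rng.uniform(0.5, 1.5) for _ in range(n_proc)]  # independent growth rates
    x = [0.05] * n_proc                                  # small starting levels
    for _ in range(steps):
        total = sum(x)  # sum over j != i equals total - x_i
        x = [
            xi + dt * ai * xi * (1 - xi / Ki + m * (total - xi) / Ki)
            for xi, ai, Ki in zip(x, a, K)
        ]
    return x


def mean_intercorrelation(rows):
    """Average correlation over all pairs of processes across people."""
    cols = list(zip(*rows))
    return statistics.fmean(pearson(c1, c2) for c1, c2 in combinations(cols, 2))


rng = random.Random(1)
coupled = [simulate_person(rng, m=0.15) for _ in range(200)]
uncoupled = [simulate_person(rng, m=0.0) for _ in range(200)]
r_coupled = mean_intercorrelation(coupled)
r_uncoupled = mean_intercorrelation(uncoupled)
print("mean r with mutualism:   ", round(r_coupled, 2))
print("mean r without mutualism:", round(r_uncoupled, 2))
```

Under these assumptions the coupled processes settle where x_i = K_i + m·Σ_{j≠i} x_j, so every process inherits part of the person’s total, which is what induces the positive correlations; with m = 0 each x_i simply approaches its own K_i and the correlations vanish. The toy says nothing about the heritability evidence Rushton and Jensen raise.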