Attenuation Theory is a psychological model of selective attention that explains how information is filtered and processed in the human brain. It suggests that individuals selectively attend to certain stimuli while ignoring others, based on the physical characteristics of the stimuli as well as factors such as context and personal relevance. This theory has had a significant impact on academic research, particularly in the fields of communication and media studies. By understanding how individuals filter and perceive information, researchers are better able to design their studies and interpret their findings. In this essay, we will explore the impact of Attenuation Theory on academic research and discuss its implications for understanding human behavior and communication.

Attenuation theory is a model of selective attention proposed by Anne Treisman, and can be seen as a revision of Donald Broadbent’s filter model. Treisman proposed it to explain why unattended stimuli are sometimes processed more thoroughly than Broadbent’s filter model could account for. Attenuation theory therefore added a layer of sophistication to Broadbent’s original account of selective attention: instead of a filter that barred unattended inputs from ever entering awareness, selection was a process of attenuation. Attenuating unattended stimuli makes it difficult, but not impossible, to extract meaningful content from irrelevant inputs, provided the stimuli retain sufficient “strength” after attenuation to make it through a hierarchical analysis process.

Brief Overview and Previous Research

Selective attention theories aim to explain why and how individuals tend to process only certain parts of the world around them while ignoring others. Because sensory information constantly besieges us through the five sensory modalities, researchers wanted not only to pinpoint where the selection of attention takes place, but also to explain how we prioritize and process sensory inputs. Early theories of attention, such as those proposed by Broadbent and Treisman, took a bottleneck perspective. That is, they assumed that it was impossible to attend to all the sensory information available at any one time because of limited processing capacity. To prevent overload, a filter was believed to reduce the amount of information passed on for processing.

Methodology

Early research came from an era primarily focused on audition and on explaining phenomena such as the cocktail party effect. This work sparked interest in how we choose which sounds in our surroundings to attend to. At a deeper level, it raised the question of how the processing of attended speech signals differs from that of unattended ones. Auditory attention is often described as the selection of a channel, message, ear, or stimulus, or, in the more general phrasing used by Treisman, the “selection between inputs”. As audition became the preferred way of examining selective attention, dichotic listening and shadowing became the preferred testing procedures.

Dichotic Listening

Dichotic listening is an experimental procedure used to demonstrate the selective filtering of auditory inputs, and was primarily utilized by Broadbent. In a dichotic listening task, participants would be asked to wear a set of headphones and attend to information presented to both ears (two channels), or a single ear (one channel) while disregarding anything presented in the opposite channel. Upon completion of a listening task, participants would then be asked to recall any details noticed about the unattended channel.

Shadowing

Shadowing can be seen as an elaboration of dichotic listening. In shadowing, participants go through largely the same process, except that they must repeat aloud the information heard in the attended ear as it is being presented. This recitation lets experimenters verify that participants are attending to the correct channel, and the number of words recited correctly can be scored as a dependent variable. Because responses are produced live, shadowing is a more versatile procedure: the immediate effects of manipulating a channel can be observed in real time. It is also favored for its accuracy, since it depends less on participants’ ability to recall the words they heard.

Broadbent’s Filter Model as a Stepping Stone

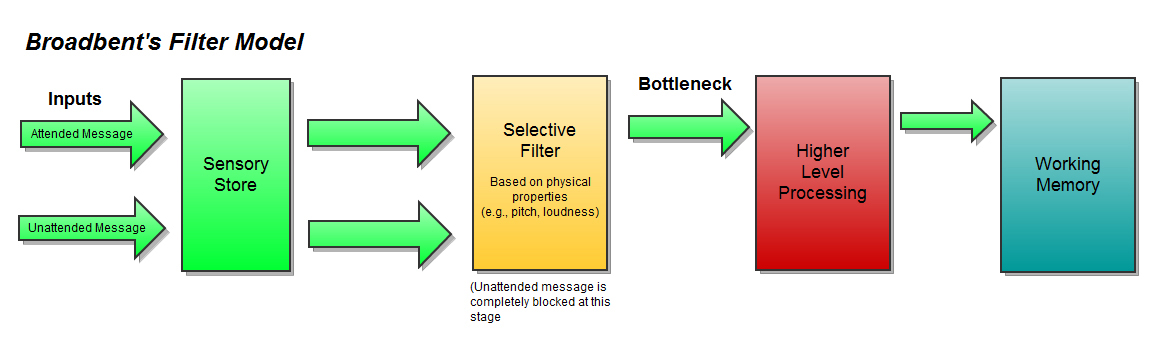

Information processing model of Broadbent’s filter

Donald Broadbent’s filter model is the earliest bottleneck theory of attention and served as the foundation upon which Anne Treisman later built her attenuation model. Broadbent proposed that the mind can only work with so much sensory input at any given time. There must therefore be a filter that lets us attend to some things while blocking others out. This filter was posited to precede pattern recognition of stimuli. Attention dictated which information reached the pattern recognition stage by controlling whether or not inputs were filtered out.

The first stage of the filtration process extracts the physical properties of all stimuli in parallel. The second stage was claimed to be of limited capacity, so this is where the selective filter was believed to reside, protecting against sensory processing overload. Based on the physical properties extracted at the first stage, the filter would allow only those stimuli possessing certain criterion features (e.g., pitch, loudness, location) to pass through. According to Broadbent, any information not being attended to would be filtered out, and would be processed only as far as needed to extract the physical qualities the filter relies on. Since selection was sensitive to physical properties alone, this was thought to explain why people know so little about the contents of an unattended message. All higher-level processing, such as the extraction of meaning, happens after the filter. Thus, information on the unattended channel should not be comprehended. As a consequence, hearing one’s own name when not paying attention should be impossible, since this information should be filtered out before its meaning can be processed.
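The all-or-none character of Broadbent’s filter can be made concrete with a toy Python sketch. It is not Broadbent’s own formalism. The `Stimulus` class, the choice of ear (location) as the criterion feature, and the example words are illustrative assumptions. “Alice” is a hypothetical stand-in for the listener’s own name.

```python
from dataclasses import dataclass


@dataclass
class Stimulus:
    word: str   # content, only analyzable after the filter
    ear: str    # physical property: location ("left" or "right")
    pitch: str  # physical property: e.g. "high" or "low"


def broadbent_filter(stimuli, attended_ear):
    """Toy early-selection filter: all-or-none, based on physical features."""
    # Stage 1: physical properties are extracted for every stimulus in parallel.
    physical = [(s.ear, s.pitch) for s in stimuli]
    recognized = []
    for stimulus, (ear, _pitch) in zip(stimuli, physical):
        # The filter passes only inputs whose criterion feature (here, location)
        # matches the attended channel; everything else is blocked completely.
        if ear == attended_ear:
            # Meaning is extracted only after the filter.
            recognized.append(stimulus.word)
    return recognized


inputs = [
    Stimulus("apple", ear="left", pitch="low"),
    Stimulus("Alice", ear="right", pitch="high"),  # hypothetical listener's name
]
print(broadbent_filter(inputs, attended_ear="left"))  # ['apple']
```

In this sketch, nothing on the unattended (right) channel reaches the semantic step. The model therefore cannot register the listener’s own name, which is the prediction the following criticisms call into question.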

Criticisms Leading to a Theory of Attenuation

As noted above, the filter model of attention has difficulty explaining how we come to extract meaning from an event we should otherwise be unaware of. For this reason, and as illustrated by the examples below, Treisman proposed attenuation theory to explain how unattended stimuli are sometimes processed more thoroughly than Broadbent’s filter model allows.

When two messages identical in content are presented, participants may notice the duplication, depending on the time interval between the onset of the irrelevant message and that of the attended message.

When participants were presented with the message “you may now stop” in the unattended ear, a significant number did so.

In a classic demonstration of the cocktail party phenomenon, participants who had their own name presented via the unattended ear often reported having heard it.

Participants with training or practice can more effectively perceive content from the unattended channel while attending to another.

Semantic processing of unattended stimuli has been demonstrated by altering the contextual relevance of words presented to the unattended ear. Participants were more likely to hear words presented to the unattended ear if those words were highly relevant to the context of the attended message.

Attenuation Model of Selective Attention

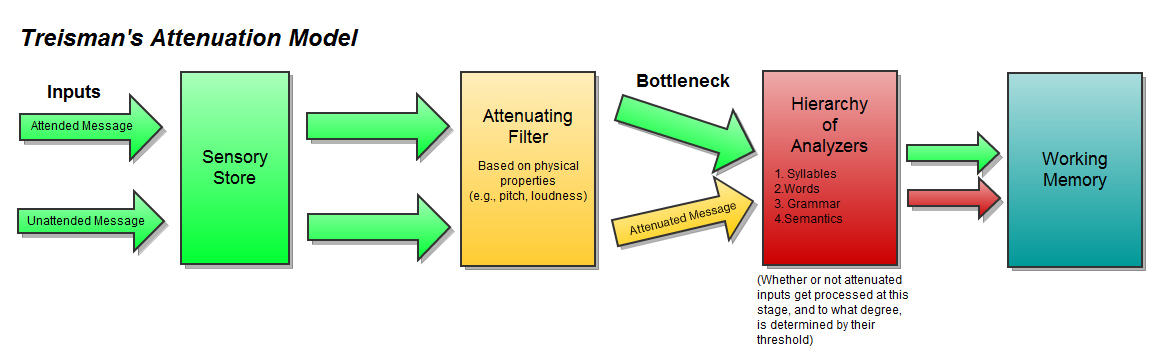

Information processing model of Treisman’s Attenuation theory

How Attenuation Occurs

Treisman’s attenuation model of selective attention retains both the idea of an early selection process and the mechanism by which physical cues serve as the primary point of discrimination. However, unlike in Broadbent’s model, the filter now attenuates unattended information instead of filtering it out completely. Treisman further elaborated on this model by introducing the concept of a threshold to explain why some words are heard in the unattended channel more often than others. Every word was believed to have its own threshold, which dictated the likelihood that it would be perceived after attenuation.

After the initial phase of attenuation, information is passed on to a hierarchy of analyzers that perform higher-level processes to extract more meaningful content (see the “Hierarchy of Analyzers” section below). The crucial aspect of attenuation theory is that attended inputs always undergo full processing. Irrelevant stimuli, in contrast, often lack a sufficiently low threshold to be fully analyzed, so only their physical qualities, rather than their meaning, are remembered. Additionally, the degree of attenuation and the subsequent processing of stimuli depend on the current demands on the processing system. Often, not enough resources are available to process unattended inputs thoroughly.

Recognition Threshold

The operation of the recognition threshold is simple: for every possible input, an individual has a certain threshold, or “amount of activation required”, in order to perceive it. The lower this threshold, the more easily an input is perceived, even after undergoing attenuation.
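The threshold idea can be sketched in a few lines of Python. This is a toy illustration, not a model Treisman specified. The attenuation factor of 0.3, the threshold values, and the example words are all hypothetical. They are chosen only to show how an attenuated input can still clear a low threshold but not a high one.

```python
# Hypothetical gain applied to the unattended channel (attended input is unchanged).
ATTENUATION = 0.3

# Hypothetical recognition thresholds: the "amount of activation required".
THRESHOLDS = {
    "table": 0.8,  # ordinary word: high threshold
    "fire": 0.25,  # subjectively important word: low threshold
    "Alice": 0.1,  # stand-in for the listener's own name: very low threshold
}


def is_perceived(word, attended, input_strength=1.0):
    """Return True if the (possibly attenuated) input reaches the word's threshold."""
    strength = input_strength if attended else input_strength * ATTENUATION
    return strength >= THRESHOLDS[word]


for word in THRESHOLDS:
    print(word, is_perceived(word, attended=False))
# table False
# fire True
# Alice True
```

In this sketch, attenuation weakens the unattended input without blocking it. Whether the input is perceived then depends entirely on the word’s threshold.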

Factors Affecting the Threshold

Context and Priming

Context plays a key role in reducing the threshold required to recognize stimuli by creating an expectancy for related information. Context acts through priming: related information becomes momentarily more pertinent and accessible, which lowers its threshold for recognition. For example, in the statement “the recess bell rang”, the word “rang” and its synonyms would have a lowered threshold because of the priming produced by the words that precede them.

Subjective Importance

Words that possess subjective importance (e.g., help, fire) have a lower threshold than those that do not. Words of great individual importance, such as your own name, have a permanently low threshold and can come into awareness under almost all circumstances. Other words vary more in their individual significance. Whether they are perceived depends on their frequency of use, their context, and their continuity with the attended message.
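Both threshold factors can be layered onto a toy threshold function. The numeric values, the `PRIMES` association table, and the word lists in this Python sketch are invented for illustration. Words of great personal importance get a permanently low threshold. Other words have their threshold lowered only when the preceding context primes them, as in the “recess bell” example.

```python
BASE_THRESHOLD = 0.8       # hypothetical threshold for an ordinary word
PRIMING_REDUCTION = 0.6    # hypothetical drop for words the context predicts
IMPORTANT_THRESHOLD = 0.1  # hypothetical permanently low threshold
ATTENUATION = 0.3          # hypothetical gain on the unattended channel

# "Alice" stands in for the listener's own name.
IMPORTANT_WORDS = {"Alice", "help", "fire"}
# Toy associative links: a context word primes these related words.
PRIMES = {"bell": {"rang", "rung", "chimed"}}


def effective_threshold(word, context):
    """Threshold for `word` given the words heard just before it."""
    if word in IMPORTANT_WORDS:
        return IMPORTANT_THRESHOLD  # low regardless of context
    primed = set()
    for previous in context:
        primed |= PRIMES.get(previous, set())
    if word in primed:
        return BASE_THRESHOLD - PRIMING_REDUCTION
    return BASE_THRESHOLD


def perceived_unattended(word, context, input_strength=1.0):
    """Does an attenuated (unattended) word still reach its threshold?"""
    return input_strength * ATTENUATION >= effective_threshold(word, context)


print(perceived_unattended("rang", ["the", "recess", "bell"]))    # True
print(perceived_unattended("rang", ["the", "kitchen", "table"]))  # False
```

Other influences named above, such as frequency of use and continuity with the attended message, are not modeled. They could be added as further adjustments to the same threshold.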

Degree of Attenuation

The degree of attenuation can change with the content of the underlying message, with greater attenuation for incoherent messages that offer little benefit to the listener. Incoherent messages receive the most attenuation because any interference they exert on the attended message would be more detrimental than that of comprehensible or complementary information. The level of attenuation can have a profound impact on whether an input is perceived, and it can vary dynamically with attentional demands.

Hierarchy of Analyzers

The hierarchical system of analysis is one of maximal economy. It allows important, unexpected, or unattended stimuli to be perceived, while ensuring that sufficiently attenuated messages get little further than the earliest stages of analysis. This prevents sensory processing capacity from being overburdened. If attentional demands (and hence processing demands) are low, full hierarchical processing takes place. If demands are high, attenuation becomes more aggressive and allows only important or relevant information from the unattended message to be processed. The hierarchical analysis process is serial, yielding a unique result for each word or piece of data analyzed. Attenuated information passes through all the analyzers only if its threshold has been lowered; otherwise, it passes only as far as its threshold allows.

The nervous system analyzes an input sequentially, starting with general physical features such as pitch and loudness, followed by the identification of words and meaning (e.g., syllables, words, grammar, and semantics). The hierarchical process also serves an essential purpose when inputs are identical in voice, amplitude, and spatial cues. If all of these physical characteristics are identical between messages, attenuation cannot take place effectively at an early level based on these properties. Instead, attenuation occurs during the identification of words and meaning, a stage where the capacity to handle information can be scarce.
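The serial hierarchy, together with the demand-dependent attenuation described under “Degree of Attenuation”, can be sketched in Python. This is a toy illustration under stated assumptions. The four stage names follow the order given above. The per-stage activation requirements, the linear link between demand and attenuation, and the `threshold_drop` parameter are hypothetical. `threshold_drop` stands in for priming or personal importance.

```python
# Analyzers in the order described: physical features first, meaning last.
STAGES = ["physical", "words", "grammar", "semantics"]
# Hypothetical activation an input needs to clear each analyzer.
STAGE_REQUIREMENT = {"physical": 0.0, "words": 0.3, "grammar": 0.5, "semantics": 0.7}


def input_strength(attended, demand):
    """Attended input is never attenuated; unattended input is attenuated
    more aggressively as attentional demand (0.0 to 1.0) rises (assumed linear)."""
    if attended:
        return 1.0
    return 0.6 * (1.0 - demand)


def analyze(strength, threshold_drop=0.0):
    """Pass the input through the analyzers serially, stopping at the first
    stage whose requirement (lowered by `threshold_drop`) it cannot meet."""
    completed = []
    for stage in STAGES:
        if strength < STAGE_REQUIREMENT[stage] - threshold_drop:
            break
        completed.append(stage)
    return completed


print(analyze(input_strength(attended=True, demand=0.9)))
# ['physical', 'words', 'grammar', 'semantics']
print(analyze(input_strength(attended=False, demand=0.0)))
# ['physical', 'words', 'grammar']
print(analyze(input_strength(attended=False, demand=0.8)))
# ['physical']
print(analyze(input_strength(attended=False, demand=0.0), threshold_drop=0.2))
# ['physical', 'words', 'grammar', 'semantics']
```

The sketch only mirrors the verbal claims of the theory: attended input receives full processing, and unattended input goes as far as its (possibly lowered) threshold allows. How far real analysis proceeds is an empirical question.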

Evidence for Attenuation Theory

Following Messages to the Unattended Ear

During shadowing experiments, Treisman presented a different prose passage to each ear. Partway through shadowing, the passages would swap sides, so that the formerly shadowed message was now presented to the unattended ear. Participants would often “follow” the message over to the unattended ear before realizing their mistake, especially if the passage had a high degree of continuity. This “following of the message” shows that some information is still being extracted from the unattended channel. It contradicts Broadbent’s filter model, which predicts that participants would be completely oblivious to the change in the unattended channel.

Manipulating the Onset of Messages

In a series of experiments carried out by Treisman (1964), two messages identical in content were played, and the time between the onset of the irrelevant message and that of the shadowed message was varied. Participants were never told that the messages were duplicates, and the time lag was altered until participants remarked on the similarity. When the irrelevant message led, the time gap could not exceed 1.4 seconds. This was believed to be because the irrelevant message underwent attenuation and received no processing beyond the physical level. This lack of deep processing means the irrelevant message must be held in the sensory store before comparison with the shadowed message, making it vulnerable to decay. In contrast, when the shadowed message led, the irrelevant message could lag behind it by as much as five seconds and participants could still perceive the similarity. This suggests that the shadowed message does not decay as quickly. That finding is consistent with what attenuation theory predicts: the shadowed message receives no attenuation and undergoes full processing. It is then passed on to working memory, where it can be held for considerably longer than the unattended message in the sensory store.

Variations on this method used identical messages spoken in different voices (e.g., a male and a female voice). Other variations manipulated whether the message was composed of non-words, to examine the effect of not being able to extract meaning. In all cases, support was found for a theory of attenuation.

Bilingual Shadowing

Bilingual students were found to recognize that a message presented to the unattended channel was the same as the one being attended to, even when presented in a different language. This was achieved by having participants shadow a message presented in English, while playing the same message in French to the unattended ear. Once again, this shows extraction of meaningful information from the speech signal above and beyond physical characteristics alone.

Electrical Shock and Unattended Words

Corteen and Dunn (1974) paired electric shocks with target words. The shocks were presented at very low intensity, so low that participants did not know when a shock occurred. When these words were later presented without shock, participants responded automatically with a galvanic skin response (GSR), even when the words were played in the unattended ear. Furthermore, GSRs generalized to synonyms of the unattended target words. This implies that the words were being processed at a deeper level than Broadbent’s model would predict.

Event-related Potentials of Irrelevant Stimuli

Van Voorhis and Hillyard (1977) used EEG to record event-related potentials (ERPs) to visual stimuli. Participants were asked to attend to or disregard specific stimuli. The results showed greater voltage fluctuations at occipital sites for attended visual stimuli than for unattended ones. These voltage modulations appeared from around 100 ms after stimulus onset, consistent with what attenuation of irrelevant inputs would predict.

Effects of Attentional Demand on Brain Activity

An fMRI study examined whether meaning is implicitly extracted from unattended words, or whether its extraction can be prevented by simultaneously presenting distracting stimuli. It found that when competing stimuli created sufficient attentional demand, no brain activity was observed in response to the unattended words, even when they were directly fixated. These results are in keeping with what an attenuation style of selection would predict and run contrary to classical late selection theory.

Competing Theories

In 1963, Deutsch & Deutsch proposed a late selection model of selective attention. They proposed that all stimuli are processed in full. The crucial difference from early selection is a filter placed later in the information processing sequence, just before entry into working memory. This late selection process supposedly operated on the semantic characteristics of a message, barring inputs from memory and subsequent awareness if they did not possess the desired content. According to this model, reduced awareness of unattended stimuli resulted from their being denied entry into working memory and from the controlled generation of responses to them. Norman revised the Deutsch & Deutsch model in 1968, adding that the strength of an input was also an important factor in its selection.

A criticism of both the original Deutsch & Deutsch model and the revised Deutsch–Norman selection model is that all stimuli, including those deemed irrelevant, are processed fully. When contrasted with Treisman’s attenuation model, the late selection approach appears wasteful, processing all information thoroughly before selecting what is admitted into working memory.